IMO they’re way too fixated on making a single model AGI.

Some people tried combining multiple specialized models (voice recognition + image recognition + LLM + controls + voice synthesis) and got quite compelling results.

I’m just impressed by how snappy it was. I wish he had given it the ability to listen longer without responding right away, though.

I wish I had that ability too.

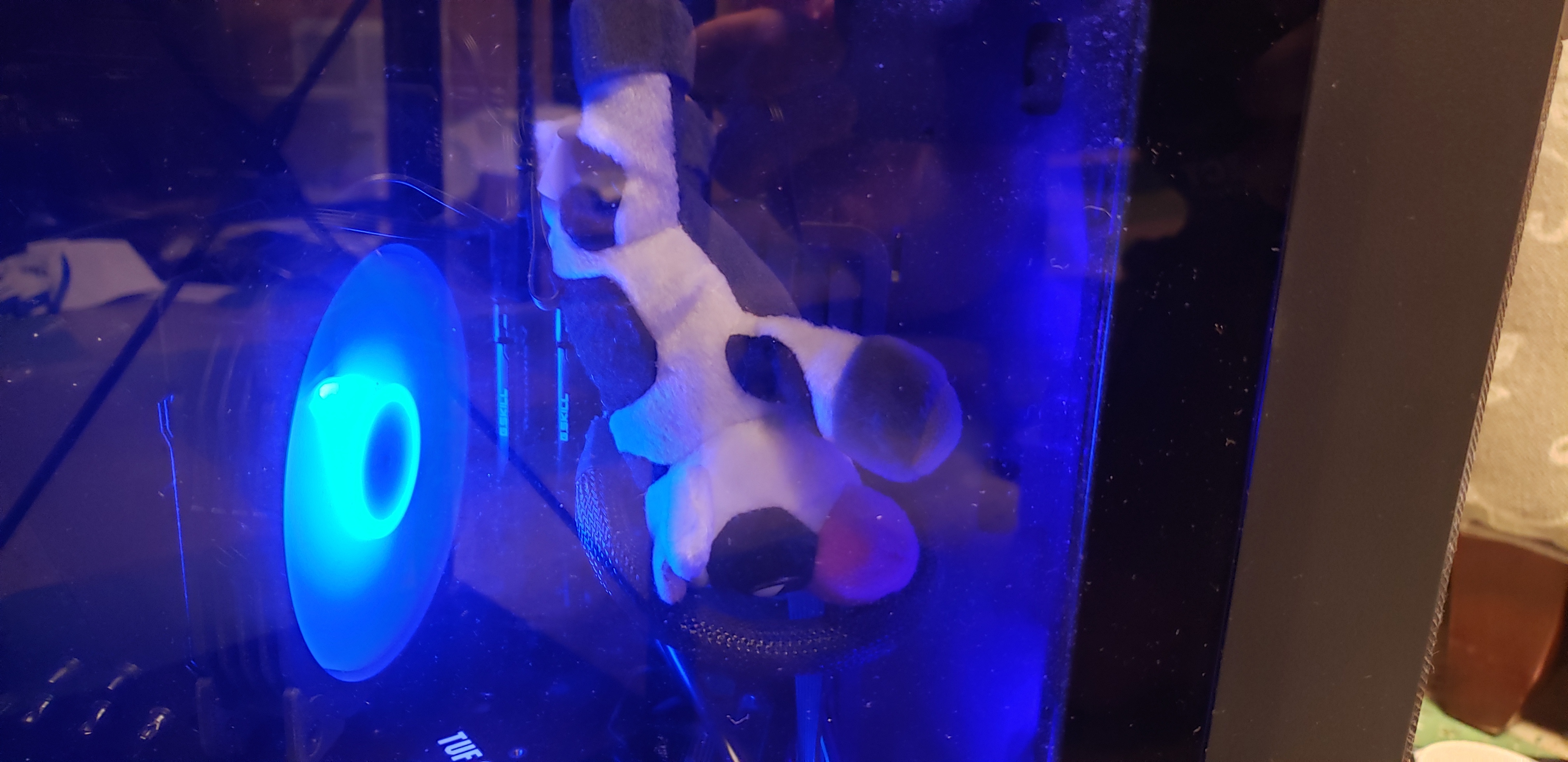

If you’re the programmer, it’s not hard to use a key press to enable TTS and then send it in chunks. I made a very similar version of this project, but my GPU didn’t stream the responses nearly as seamlessly.
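One way to read the key-press-and-chunks idea is as a push-to-talk gate: nothing is captured until the key is held, captured audio is handed off in fixed-size chunks, and whatever is left over is flushed when the key is released. Below is a minimal Python sketch, assuming audio arrives as lists of float samples. `PushToTalkBuffer`, `key_down`, `key_up`, `feed`, and `chunk_size` are hypothetical names, and the `sent` list stands in for whatever actually receives the chunks (e.g. a speech recognizer). Hooking up real keyboard and microphone events is left out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PushToTalkBuffer:
    """Hypothetical push-to-talk gate: audio is kept only while the key is held
    and is handed off in fixed-size chunks instead of waiting for a pause."""
    chunk_size: int  # samples per chunk sent downstream (assumed value)
    active: bool = False
    _pending: List[float] = field(default_factory=list)
    # Stand-in for the real consumer (e.g. a speech recognizer).
    sent: List[List[float]] = field(default_factory=list)

    def key_down(self) -> None:
        # Start accepting microphone frames.
        self.active = True

    def key_up(self) -> None:
        # Releasing the key flushes any leftover samples as a final, shorter chunk.
        self.active = False
        if self._pending:
            self.sent.append(self._pending)
            self._pending = []

    def feed(self, samples: List[float]) -> None:
        # Frames arriving while the key is up are dropped, so the assistant
        # never starts responding before the user is done talking.
        if not self.active:
            return
        self._pending.extend(samples)
        # Emit every complete chunk; keep the remainder pending.
        while len(self._pending) >= self.chunk_size:
            self.sent.append(self._pending[: self.chunk_size])
            self._pending = self._pending[self.chunk_size:]
```

For example, with `chunk_size=4`, samples fed before `key_down()` are ignored, six samples fed while the key is held produce one full chunk of four, and `key_up()` flushes the remaining two as a second chunk.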

Sorry, I meant the real-life me, crippling AuDHD and all. I’m also not a programmer.

I get that the DeepSeek launch shows that what NVIDIA and OpenAI have been pushing as the only roadmap to AI is incorrect, but doesn’t DeepSeek’s ability to get by with fewer, lower-quality processors let companies like NVIDIA and OpenAI rethink how they expand their infrastructure and push even further, faster? I’m not sure why the selloff occurred. It’s like someone got a PC to POST quicker with a 286, and everybody said, “Hey, those 386s sure do look nice, but we’re gonna fool around with these instead.”

The reason for the correction is that the “smart money” that breathlessly invested billions on the assumption that CUDA is absolutely required for a good AI model is suddenly looking very incorrect.

I had been predicting that AMD would make inroads with OpenCL, but this news is even better. Reportedly, DeepSeek doesn’t even necessarily require either OpenCL or CUDA.